How it works

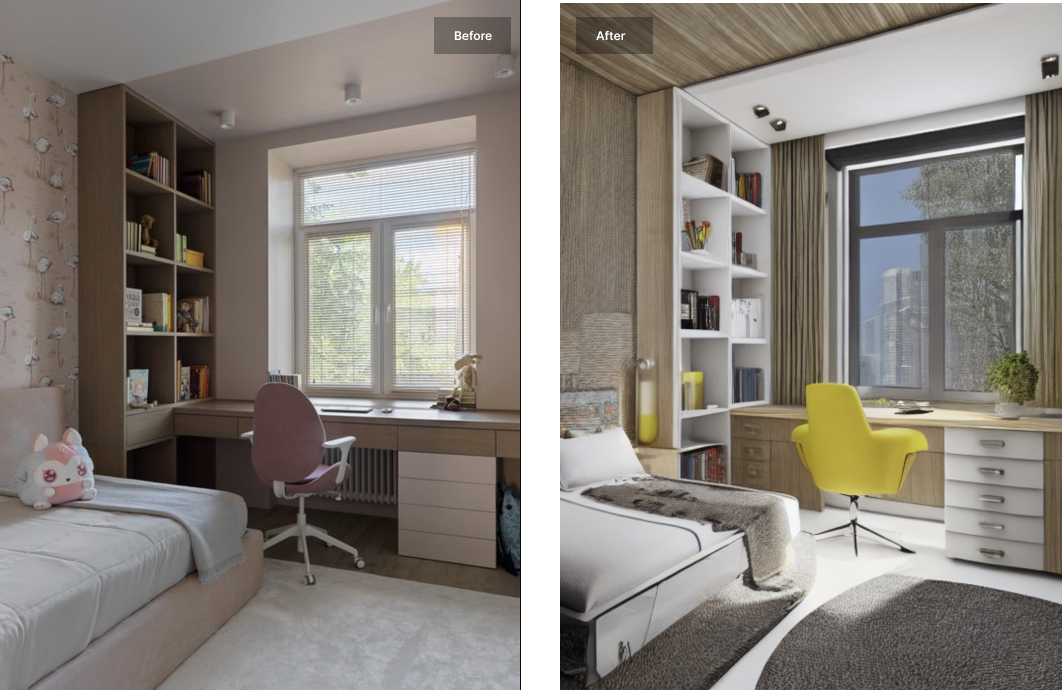

This model uses ControlNet to adapt Stable Diffusion so that it generates an output image from straight lines detected by M-LSD in an input image, in addition to a text prompt. The training data was generated by using a learning-based deep Hough transform to detect straight lines in Places2 images and BLIP to generate captions. Training started from the Canny model checkpoint and took 150 GPU-hours on Nvidia A100 80G GPUs.
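As a rough illustration of the conditioning input described above, the sketch below rasterizes a list of line segments into a white-on-black line map. It does not run M-LSD: the `segments` list and the `render_line_map` helper are hypothetical stand-ins for a detector's output, and the preprocessing actually used for this model may differ.

```python
import numpy as np

def render_line_map(segments, height, width):
    """Draw white line segments on a black canvas (hypothetical helper)."""
    canvas = np.zeros((height, width), dtype=np.uint8)
    for x0, y0, x1, y1 in segments:
        # Sample enough points along the segment to leave no gaps.
        n = int(max(abs(x1 - x0), abs(y1 - y0))) + 1
        xs = np.rint(np.linspace(x0, x1, n)).astype(int)
        ys = np.rint(np.linspace(y0, y1, n)).astype(int)
        # Drop samples that fall outside the image.
        keep = (xs >= 0) & (xs < width) & (ys >= 0) & (ys < height)
        canvas[ys[keep], xs[keep]] = 255
    return canvas

# Assumed detector output: (x0, y0, x1, y1) endpoints in pixel coordinates.
segments = [(0, 0, 7, 0), (2, 1, 2, 6)]
line_map = render_line_map(segments, height=8, width=8)
```

The resulting array plays the role of the control image that is supplied alongside the text prompt.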

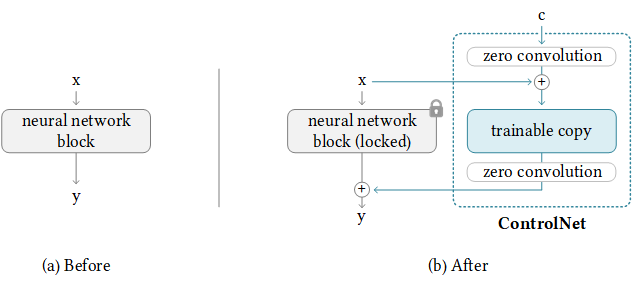

ControlNet is a neural network structure that adds control to large pretrained diffusion models, letting them accept input conditions beyond text prompts. A ControlNet learns task-specific conditions end to end, and the learning is robust even when the training dataset is small (< 50k samples). Moreover, training a ControlNet is as fast as fine-tuning a diffusion model, and the model can be trained on a personal device. Alternatively, if powerful computation clusters are available, the model can scale to large amounts of training data (millions to billions of samples). Large diffusion models like Stable Diffusion can be augmented with ControlNets to enable conditional inputs such as edge maps, segmentation maps, and keypoints.
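The toy sketch below illustrates one way such a structure can add a condition without disturbing the pretrained model at the start of training. It follows the general idea from the ControlNet paper (a frozen block, a trainable copy, and zero-initialized connecting layers), but everything here is a simplification: the `block` function, the dense matrices standing in for convolutions, and all variable names are assumptions made for illustration, not the original implementation.

```python
import numpy as np

rng = np.random.default_rng(0)

def block(x, w):
    """Stand-in for one network block: a linear map followed by tanh."""
    return np.tanh(x @ w)

dim = 4
w_locked = rng.normal(size=(dim, dim))  # pretrained weights, kept frozen
w_copy = w_locked.copy()                # trainable copy, same starting point
z_in = np.zeros((dim, dim))             # zero-initialized input projection
z_out = np.zeros((dim, dim))            # zero-initialized output projection

def controlled_block(x, cond, z_in, z_out):
    # Frozen path plus the trainable copy's contribution. The copy sees
    # the condition through z_in, and its output is gated by z_out.
    locked = block(x, w_locked)
    control = block(x + cond @ z_in, w_copy) @ z_out
    return locked + control

x = rng.normal(size=(2, dim))     # features from the diffusion model
cond = rng.normal(size=(2, dim))  # encoded condition, e.g. a line map

# With zero projections the control branch contributes nothing.
at_init = controlled_block(x, cond, z_in, z_out)
# Once the projections move away from zero, the condition has an effect.
after_update = controlled_block(x, cond, z_in + 0.1, z_out + 0.1)
```

Because the connecting layers start at zero, the combined block initially reproduces the frozen block's output exactly, which is one plausible reason training can stay stable on small datasets.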

Original model & code on GitHub